We are in the process of using machine learning, which is just glorified statistics, to analyse more and more aspects of our society and our very beings. But what if we don't like the outcome? Self-reflection can be painful. Sure, we can always deny the results we don't like, and it doesn't hurt to be sceptical. We can argue that machine learning learns from human data and is thus bound to acquire human biases. But what if those biases contain a grain of truth? Will humanity be comfortable looking into the mirror it just invented?

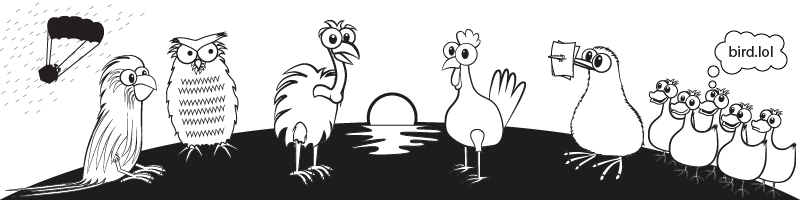

The Controversy